A recent study reports a shift in how the public views artificial intelligence (AI) relative to human decision-makers. Conducted by researchers from the University of Portsmouth and the Max Planck Institute for Innovation and Competition, the study examined whether people prefer algorithmic or human decision-makers in redistributive scenarios. Published in the journal Public Choice, the research explores what this preference implies for integrating technology into decision-making processes.

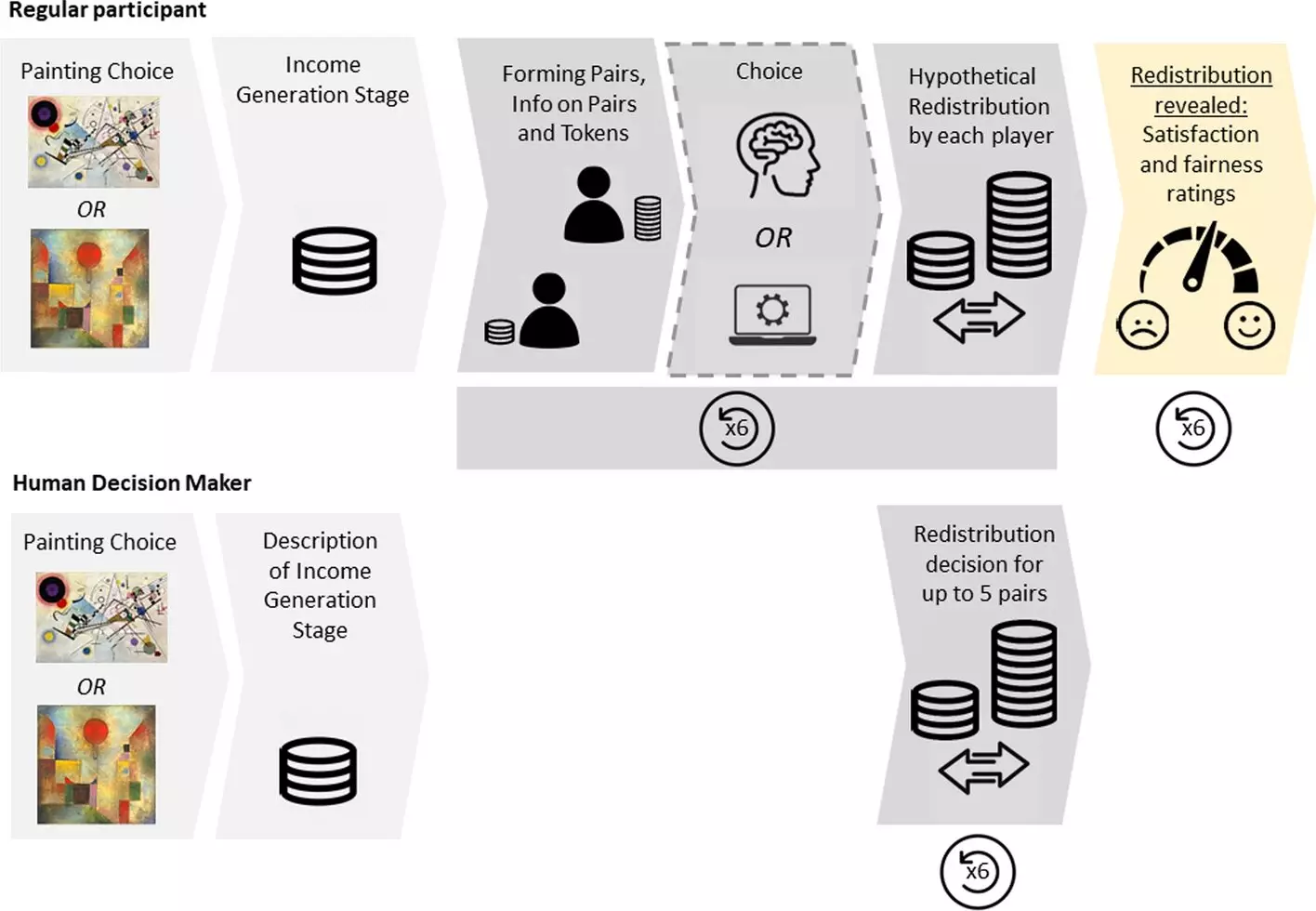

The researchers designed an online decision experiment to gauge participants’ inclination towards AI or human decision-makers in redistributive scenarios. More than 200 participants from the United Kingdom and Germany were presented with a scenario in which the earnings of two individuals needed to be redistributed. Surprisingly, over 60% of the participants opted for AI over human decision-makers, regardless of the potential presence of discrimination. This marks a significant departure from the conventional belief that people prefer human involvement in decisions involving fairness and morality.

Despite the clear preference for AI in redistributive decisions, participants expressed less satisfaction with the decisions made by algorithms compared to those made by humans. The perception of fairness played a crucial role in shaping participants’ subjective ratings of the decisions. It was observed that individuals tended to evaluate decisions based on their own material interests and preconceived notions of fairness. While participants were willing to tolerate a reasonable deviation from their ideal decision, any divergence from established fairness principles led to dissatisfaction and negative reactions.

The Role of Transparency and Accountability

Dr. Wolfgang Luhan, the lead researcher of the study and an Associate Professor of Behavioral Economics at the University of Portsmouth, highlighted the importance of transparency and accountability in the acceptance of AI decision-makers. The study emphasized that while people are receptive to the idea of algorithmic decision-making due to its potential for unbiased decisions, the actual performance of algorithms and their ability to explain decision-making processes are critical factors in gaining public trust. Particularly in contexts involving moral decision-making, ensuring transparency and accountability of algorithms is paramount.

The findings of this study have significant implications for various sectors, including business and public governance. With the increasing use of AI in hiring, compensation, policing, and parole strategies, understanding public preferences towards algorithmic decision-makers is essential. The study suggests that as algorithms improve in consistency and transparency, there may be growing support for their use in morally significant areas. This shift towards embracing AI in decision-making processes may lead to more efficient and unbiased outcomes in the future.

The study on the preference for AI over humans in redistributive decisions highlights society's evolving attitude towards technology in decision-making. While acceptance of AI decision-makers is growing, transparency, accountability, and alignment with fairness principles remain critical to gaining public trust and support. As technology continues to reshape various aspects of society, understanding and adapting to these changing preferences will be key to harnessing the full potential of artificial intelligence.
