Deep neural networks (DNNs) are now widely used for real-world tasks, and their fairness has come under growing scrutiny. A recent study by researchers at the University of Notre Dame, published in Nature Electronics, examines how hardware systems can influence the fairness of AI, particularly in high-stakes domains like healthcare. This analysis reviews the key findings of the research and evaluates their implications for the future development of fair AI systems.

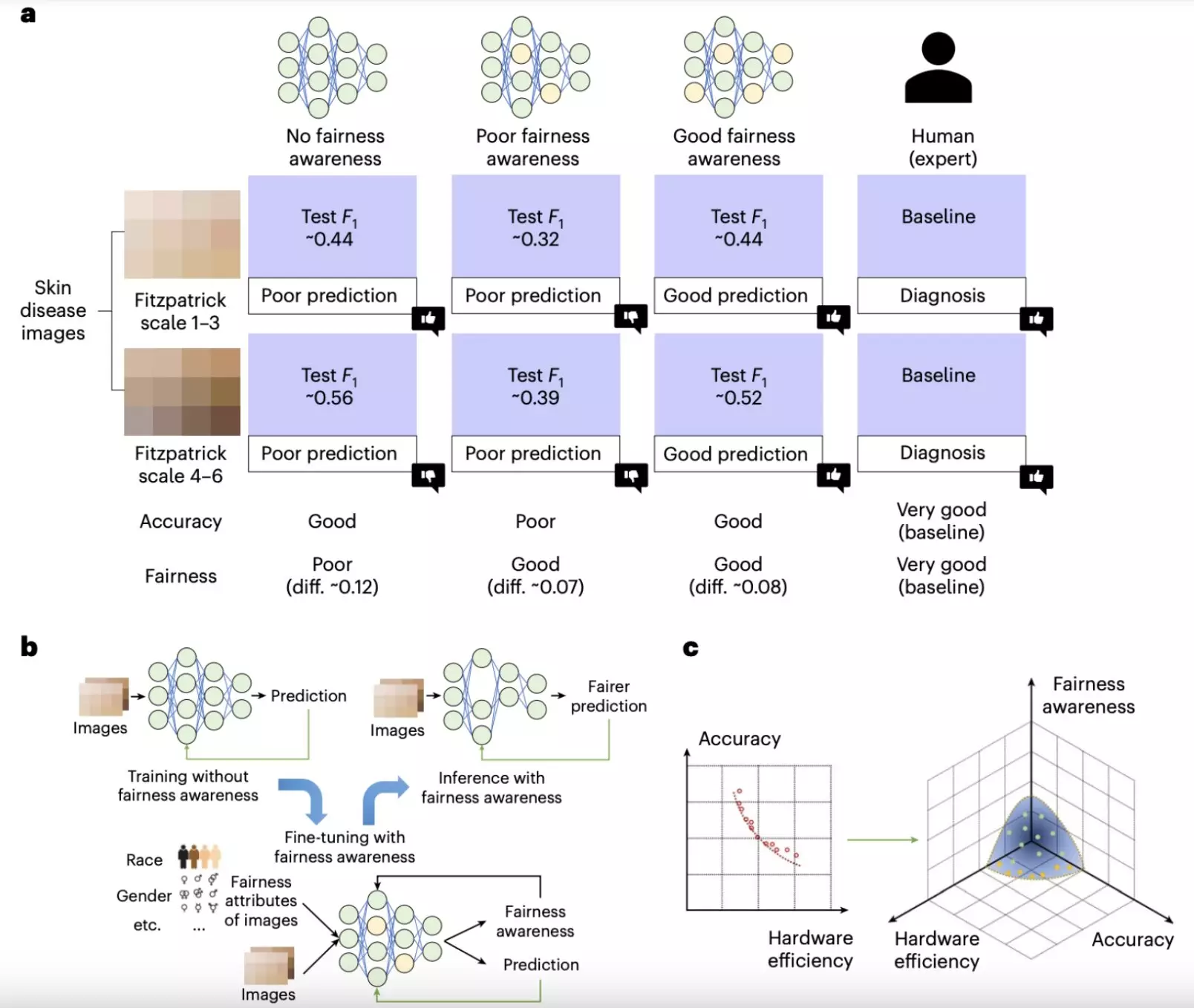

The study, led by Yiyu Shi and his colleagues, investigated the role of hardware design in shaping the fairness of DNNs. In contrast to the prevailing focus on algorithmic fairness, the researchers examined how emerging hardware architectures, such as computing-in-memory (CiM) devices, affect the fairness of AI models. Through a series of experiments, they found that hardware-induced non-idealities, such as device variability, can lead to trade-offs between accuracy and fairness in AI systems.
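To make the accuracy-versus-fairness trade-off concrete, here is a minimal Python sketch of how one might measure it. It is an illustration under stated assumptions, not the study's method: the article does not say which fairness metric the researchers used, so the gap between the best and worst per-group accuracy stands in for one, and additive Gaussian noise on the weights stands in for device variability. The function names (`perturb_weights`, `group_accuracies`, `fairness_gap`) are hypothetical.

```python
import random


def perturb_weights(weights, sigma, rng):
    """Assumed model of device variability: add Gaussian noise to each weight."""
    return [w + rng.gauss(0.0, sigma) for w in weights]


def predict(weights, bias, x):
    """Linear classifier: return 1 if the weighted sum is non-negative, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= 0 else 0


def group_accuracies(weights, bias, samples):
    """samples is a list of (features, label, group); returns {group: accuracy}."""
    correct, total = {}, {}
    for x, y, group in samples:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(predict(weights, bias, x) == y)
    return {g: correct[g] / total[g] for g in total}


def fairness_gap(acc_by_group):
    """Assumed fairness proxy: best-group accuracy minus worst-group accuracy."""
    return max(acc_by_group.values()) - min(acc_by_group.values())
```

Evaluating `fairness_gap(group_accuracies(perturb_weights(w, sigma, rng), b, samples))` for several values of `sigma`, alongside overall accuracy, would show whether simulated variability hurts some groups more than others.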

One of the key findings was that larger, more complex neural networks tended to exhibit greater fairness, but they also demanded more capable hardware. This underscores the interplay between model structure and the hardware platform on which a model is deployed. To ease that tension, the researchers proposed strategies such as compressing larger models to reduce computational demands while maintaining performance.

Furthermore, the study highlighted the need for noise-aware training strategies to improve the robustness and fairness of AI models under varying hardware conditions. By introducing controlled noise during training, developers can mitigate the impact of device variability and enhance the overall fairness of AI systems. These findings emphasize the importance of considering hardware constraints in addition to software algorithms when designing fair AI solutions, particularly for sensitive applications like medical diagnostics.
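The idea of injecting controlled noise during training can be sketched in a few lines of Python. This is a toy example under assumptions, not the researchers' implementation: it uses a logistic-regression model in place of a DNN, models device variability as additive Gaussian noise with standard deviation `sigma`, and the function name `train_noise_aware` is hypothetical.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_noise_aware(data, sigma, epochs=200, lr=0.1, seed=0):
    """Train logistic regression while injecting weight noise in each forward pass.

    data is a list of (features, label) pairs with labels 0 or 1.
    sigma is the assumed standard deviation of the device-induced weight noise.
    Returns the clean (noise-free) weights and bias.
    """
    rng = random.Random(seed)
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in data:
            # Forward pass with perturbed weights, mimicking what noisy hardware computes.
            noisy = [w + rng.gauss(0.0, sigma) for w in weights]
            p = sigmoid(sum(w * xi for w, xi in zip(noisy, x)) + bias)
            err = p - y  # gradient of the log-loss with respect to the pre-activation
            # The update is applied to the clean weights, not the noisy copy.
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias
```

Because the model only ever sees perturbed weights during training, it is pushed toward solutions that tolerate such perturbations at inference time. Whether that also narrows accuracy gaps between groups would need to be measured separately for a given model and dataset.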

Looking ahead, the research team plans to delve deeper into the intersection of hardware design and AI fairness by developing cross-layer co-design frameworks. These frameworks will optimize neural network architectures for fairness while accommodating hardware constraints, paving the way for more equitable AI systems. Additionally, the researchers aim to explore adaptive training techniques that can ensure AI models remain fair across diverse hardware systems and deployment scenarios.

In light of these findings, it is evident that advancing fairness in AI requires a holistic approach that considers both hardware and software components. By prioritizing fairness in hardware designs and training strategies, developers can mitigate biases and disparities in AI models, ultimately leading to more accurate and equitable outcomes. The research by Shi and his team sets a valuable precedent for future efforts aimed at promoting fairness in AI and underscores the need for continued collaboration between hardware engineers and AI researchers to achieve this goal.
