Reasoning is an essential process that humans use to draw conclusions and solve problems. Two broad categories of reasoning are deductive reasoning and inductive reasoning. Deductive reasoning starts from a general rule or premise and applies it to draw conclusions about specific cases. Inductive reasoning works in the opposite direction: it generalizes from specific observations to a broader rule. Both play a crucial role in how humans process information.
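The difference between the two directions can be made concrete with a toy sketch. This is an illustration only, not taken from the study. The helpers `deduce` and `induce` and the candidate rules are hypothetical, and induction is deliberately simplified to a search over a fixed set of candidate rules.

```python
# Deduction: apply a known general rule to a specific case.
def deduce(rule, case):
    return rule(case)

# Induction (simplified): pick a general rule that explains every observation.
def induce(observations, candidate_rules):
    """Return (name, rule) for the first candidate consistent with all (input, output) pairs, else None."""
    for name, rule in candidate_rules.items():
        if all(rule(x) == y for x, y in observations):
            return name, rule
    return None

# Hypothetical candidate rules for illustration.
double = lambda x: 2 * x
square = lambda x: x * x
candidates = {"double": double, "square": square}

print(deduce(double, 7))                          # rule given, conclusion derived
print(induce([(1, 1), (2, 4), (3, 9)], candidates)[0])  # observations given, rule inferred
```

Running it prints `14` for the deductive step and `square` for the inductive step, since only squaring fits all three observations.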

While numerous studies have explored how humans use deductive and inductive reasoning in everyday life, the use of these reasoning strategies by artificial intelligence (AI) systems has received less attention. A recent study by a research team at Amazon and the University of California, Los Angeles examined the fundamental reasoning abilities of large language models (LLMs), AI systems capable of processing, generating, and adapting text in human languages. The study found that LLMs exhibit strong inductive reasoning capabilities but often lack deductive reasoning skills.

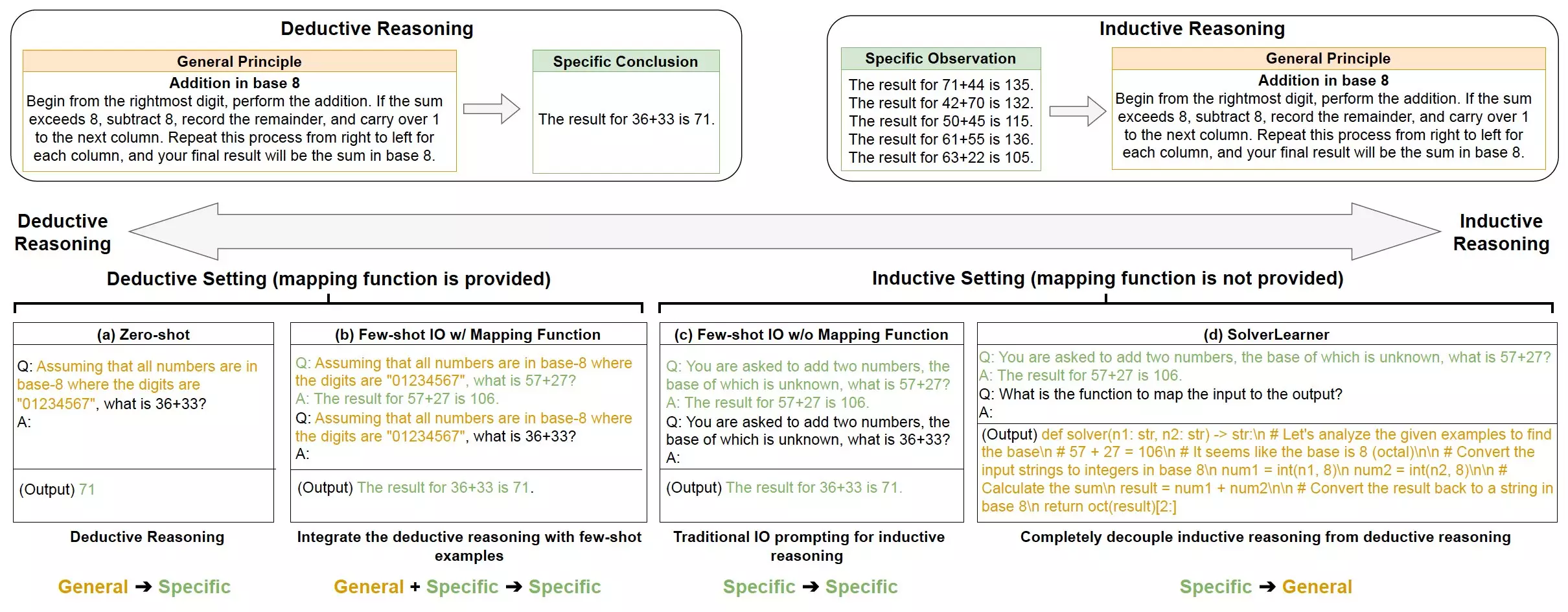

To distinguish between inductive and deductive reasoning, the researchers introduced a new framework called SolverLearner. It separates the process of learning rules from the process of applying them to specific cases. Because the learned rules are applied by external tools rather than by the LLM itself, the researchers could assess the inductive reasoning capabilities of LLMs in isolation. The study revealed that LLMs have strong inductive reasoning abilities, even on tasks involving "counterfactual" scenarios that deviate from the norm.
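The separation described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the researchers' implementation. The LLM is replaced by a hard-coded stub, `propose_function`, that returns Python source for a rule. The helper names `propose_function`, `learn_rule`, and `apply_rule` are hypothetical. Base-9 addition is an assumed example of a counterfactual task, chosen because it deviates from ordinary base-10 arithmetic. The "external tool" here is simply the Python interpreter.

```python
# Hypothetical stand-in for an LLM call: given input/output examples, it
# returns Python source for a function `f`. In a real pipeline this text
# would be generated by the model; here it is hard-coded for illustration.
def propose_function(examples):
    return (
        "def f(a, b):\n"
        "    # add two base-9 numerals given as strings\n"
        "    n = int(a, 9) + int(b, 9)\n"
        "    digits = ''\n"
        "    while n:\n"
        "        digits = str(n % 9) + digits\n"
        "        n //= 9\n"
        "    return digits or '0'\n"
    )

def learn_rule(examples):
    """Inductive step: obtain a candidate rule, expressed as code, from examples."""
    source = propose_function(examples)
    namespace = {}
    # The interpreter acts as the external tool. Real model output
    # should be run in a sandbox rather than with a bare exec().
    exec(source, namespace)
    return namespace["f"]

def apply_rule(rule, cases):
    """Rule application is delegated to the interpreter, not the model."""
    return [rule(*case) for case in cases]

# Base-9 addition examples: a counterfactual variant of ordinary arithmetic.
train = [(("5", "6"), "12"), (("8", "8"), "17"), (("10", "10"), "20")]
rule = learn_rule(train)
assert all(rule(*x) == y for x, y in train)  # learned rule fits the examples
print(apply_rule(rule, [("76", "14"), ("88", "1")]))
```

This prints `['101', '100']`. Since the model only has to produce the rule and never has to execute it, any errors in the final answers can be attributed to the inductive step rather than to the model's deductive ability.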

The findings of the study have important implications for the development of AI systems. While LLMs excel in inductive reasoning tasks, they struggle with deductive reasoning, especially in scenarios based on hypothetical assumptions or deviations from the norm. This suggests that leveraging the strong inductive capabilities of LLMs could be beneficial in designing AI systems such as chatbots. By understanding the strengths and weaknesses of LLM reasoning abilities, AI developers can optimize these systems for specific tasks.

Future research in this area could focus on exploring how the ability of an LLM to compress information relates to its inductive reasoning capabilities. By gaining a deeper understanding of the reasoning processes of LLMs, researchers can further enhance the performance of these AI systems. This research could pave the way for new developments in leveraging inductive reasoning in AI applications and improving the overall capabilities of LLMs.

The study highlights the importance of inductive reasoning in how LLM-based AI systems function. Continued exploration of the reasoning processes of LLMs could lead to significant advances in AI development and applications.
