As technology reshapes everyday life, even mundane tasks such as picking the freshest apples at the grocery store may change. A team of researchers at the Arkansas Agricultural Experiment Station has taken a step toward an application that uses machine learning to help assess food quality. Their research underscores the limitations of current technology compared with human adaptability, and it points to a possible pathway for optimizing food presentation and processing through better software design.

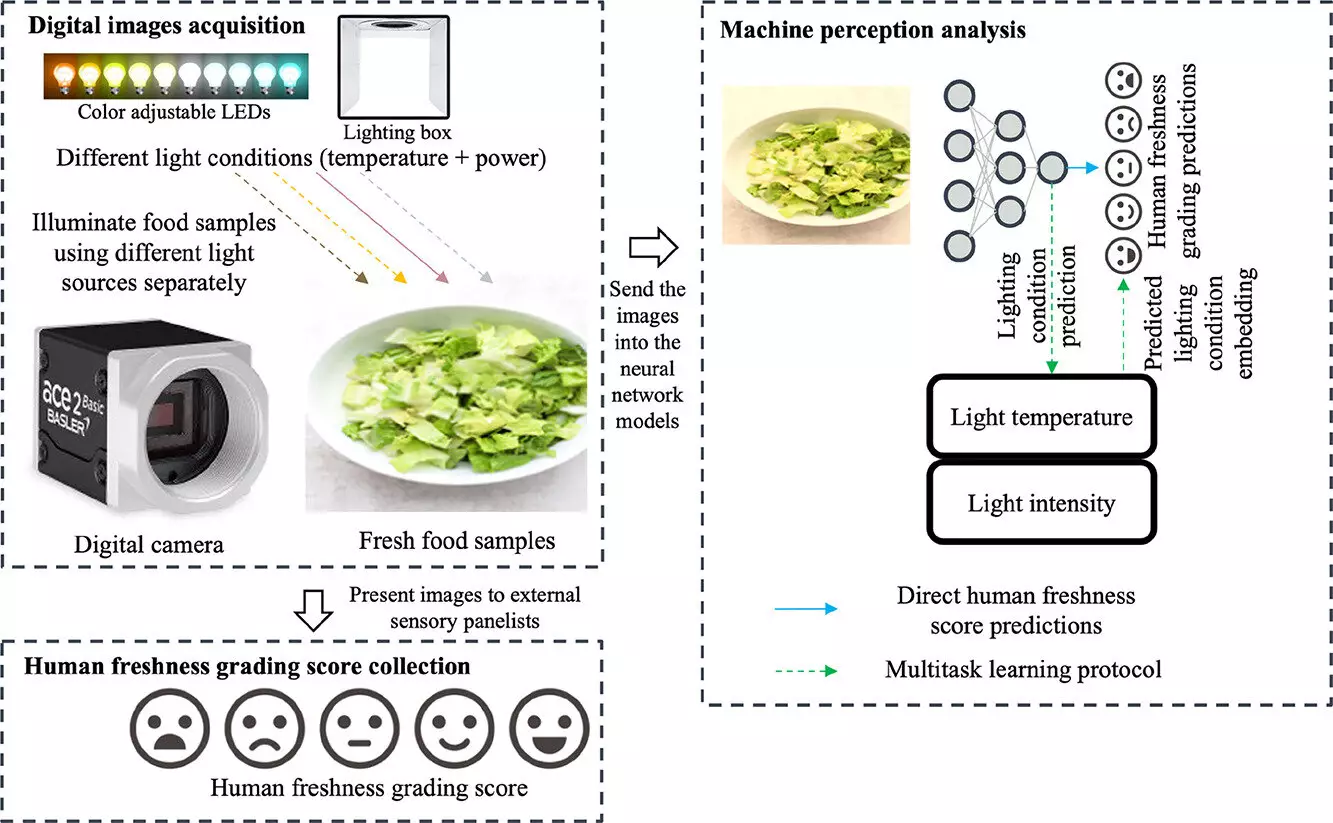

When faced with many options at the grocery store, people rely on instinct aided by sensory cues (sight, touch, and perhaps even smell) to make decisions. Research led by Dongyi Wang, an assistant professor specializing in smart agriculture and food manufacturing, shows that while machine learning models hold promise for predicting food quality, they do not currently match the consistency of human evaluations, especially under varying environmental conditions. The study highlights the importance of training computer models on data that reflects human perception, rather than relying solely on raw image data devoid of contextual cues.

The findings indicate that incorporating human perception data can reduce the prediction errors of machine learning models by up to 20%. This result lays a foundation for future work in the field, including more intuitive apps that help consumers choose the best products available.
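To make the "up to 20%" figure concrete, here is a minimal sketch of how a relative reduction in prediction error can be computed. The article does not say which error metric the study used, so mean absolute error is an assumption here, and every number below is made up for illustration rather than taken from the study. The function names are likewise hypothetical.

```python
def mean_absolute_error(predicted, actual):
    """Average absolute gap between model predictions and human grades."""
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def relative_reduction(baseline_error, improved_error):
    """Fraction by which the improved model lowers the baseline error."""
    return (baseline_error - improved_error) / baseline_error


# Made-up human freshness grades for five images (not data from the study).
human_grades = [3.0, 4.0, 2.0, 5.0, 3.0]

# Hypothetical predictions from a model trained on raw images only.
baseline_predictions = [2.0, 5.0, 3.0, 4.0, 4.0]

# Hypothetical predictions from a model that also uses human perception data.
improved_predictions = [2.0, 5.0, 3.0, 4.0, 3.0]

baseline_error = mean_absolute_error(baseline_predictions, human_grades)
improved_error = mean_absolute_error(improved_predictions, human_grades)
reduction = relative_reduction(baseline_error, improved_error)

print(f"baseline error: {baseline_error:.2f}")
print(f"improved error: {improved_error:.2f}")
print(f"relative reduction: {reduction:.0%}")
```

With these invented numbers the relative reduction comes out to roughly 20%. The point is only that the figure is relative to the baseline model's error, not an absolute change in grade.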

A key revelation from the study is the impact of illumination on human perception of food quality. The researchers discovered that lighting plays a pivotal role in how we interpret the appearance of food. For instance, warmer lighting often masks undesirable traits, such as browning in lettuce. By documenting how participants of varied ages assessed the quality of Romaine lettuce under different lighting settings, the study illustrates that our judgment can be swayed based on environmental factors. This aspect is not merely a trivial observation but a critical variable that must be integrated into machine learning models to achieve higher accuracy in food evaluations.

During the experiment, 109 participants graded 75 different images of Romaine lettuce over the course of five days. The photographic conditions were varied systematically to build a robust dataset of human responses across different brightness levels and color temperatures. This empirical data stands in contrast to traditional training methods, which often use limited, unvaried criteria, and it opens avenues for a more nuanced understanding of how consumers perceive food quality.
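A dataset like the one described above could be organized as one record per human grade, tagged with the lighting condition under which the image was viewed. The sketch below is an illustration only: the field names, the grading scale, and the sample values are assumptions, not details from the study.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Rating:
    participant_id: int
    image_id: int
    brightness: str     # hypothetical label, e.g. "low" or "high"
    color_temp_k: int   # hypothetical color temperature in kelvin
    grade: float        # hypothetical freshness grade, higher means fresher


def mean_grade_by_lighting(ratings):
    """Average the human grades for each (brightness, color temperature) pair."""
    grouped = defaultdict(list)
    for rating in ratings:
        grouped[(rating.brightness, rating.color_temp_k)].append(rating.grade)
    return {condition: mean(grades) for condition, grades in grouped.items()}


# Made-up ratings of a single image under two lighting conditions.
ratings = [
    Rating(1, 10, "low", 2700, 4.0),
    Rating(2, 10, "low", 2700, 3.0),
    Rating(1, 10, "high", 6500, 2.0),
    Rating(2, 10, "high", 6500, 3.0),
]

summary = mean_grade_by_lighting(ratings)
for condition, average in summary.items():
    print(condition, average)
```

Grouping grades by lighting condition makes it easy to see whether the same image is judged differently under warm and cool light, which is the kind of variation a model would need to learn.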

Integrating human perception into machine learning models is an approach that is currently lacking in food technology. The study’s findings suggest that existing algorithms typically rely on “human-labeled ground truths” while overlooking the conditions, such as lighting, under which those quality judgments are made. In a marketplace dominated by quick decision-making, this oversight could mislead processors, retailers, and ultimately consumers.

Harnessing the dual strengths of human insight and artificial intelligence paves the way for machine vision systems that can assess quality across a range of products, from perishable foods to luxury items like jewelry. By refining the training of these machine learning models to account for human variances and biases, industries can work toward providing higher quality food and giving consumers reliable technological support in their day-to-day shopping.

As we look towards a future increasingly intertwined with technology, it is crucial that advancements in machine learning take into consideration the complexities of human perception. The research carried out by Wang and his colleagues from the University of Arkansas marks a significant stride in bridging the gap between technology and human sensory evaluation in food quality assessments. By paving the way for more robust and accurate models, we can envision a world where selecting the freshest produce becomes an effortless task, ultimately enhancing our overall eating experience.

As buyers become more informed and technologies evolve, it’s only a matter of time before applications built on this research reach the market, giving consumers tools to make educated choices so that we enjoy the best of what nature has to offer while minimizing food waste.
