Speech emotion recognition (SER) has become a significant area of artificial intelligence research and application, using advances in deep learning to detect emotional states from audio signals. The technology promises to improve user experience in domains such as customer service, mental health, and entertainment. As these models grow more sophisticated, however, so do concerns about their vulnerabilities, particularly their susceptibility to adversarial attacks. A recent study by researchers at the University of Milan examines this issue, exposing weaknesses in SER systems and calling for more robust approaches to model training and evaluation.

The study, published in “Intelligent Computing”, examines how adversarial attacks affect SER models, covering both white-box and black-box attack methods. Adversarial examples are inputs altered in subtle ways so that they can mislead a machine learning model into producing an incorrect output. The study found that SER models combining convolutional neural networks (CNNs) with long short-term memory (LSTM) networks are notably vulnerable to such inputs: every attack the researchers assessed significantly degraded SER performance. This raises concerns about how reliable these systems are in real-world applications.
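The idea of a "subtle alteration" can be stated formally. In the standard formulation (general notation, not taken from the study), with $f$ the classifier, $x$ a clean audio input, $\delta$ the perturbation, and $\varepsilon$ a small budget measured in some $p$-norm, an untargeted attack seeks:

$$
\text{find } \delta \quad \text{such that} \quad f(x + \delta) \neq f(x), \qquad \|\delta\|_p \le \varepsilon
$$

White-box attacks can use the gradients of the model's loss to choose $\delta$; black-box attacks must search for it using only the model's outputs.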

The research team systematically evaluated several attack strategies on three widely used datasets: EmoDB (German), EMOVO (Italian), and RAVDESS (English). White-box attacks, such as the Fast Gradient Sign Method (FGSM) and DeepFool, have full access to a model's internals. Yet it was the black-box attacks, particularly the Boundary Attack, that proved surprisingly effective while requiring minimal knowledge of the model. This is alarming: it shows that an adversary could compromise an SER system without a detailed understanding of how the model works.
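To make the white-box/black-box distinction concrete, here is a minimal sketch on a toy model; it is not the study's CNN-LSTM or its attack implementations. FGSM perturbs an input by x' = x + ε · sign(∇ₓ L(θ, x, y)), a single step along the sign of the loss gradient, which requires gradient access. The second function is a simplified label-only random walk in the spirit of the Boundary Attack: it starts from an input that is already misclassified and keeps a proposal only if it stays misclassified while moving closer to the original. It omits the real Boundary Attack's orthogonal steps and step-size adaptation. The two-feature logistic-regression model, its weights, and all function and parameter names (`fgsm`, `decision_only_attack`, `noise`, `pull`) are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Hypothetical white-box model: logistic regression on a feature vector x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def predict_label(w, b, x):
    return 1 if predict_proba(w, b, x) >= 0.5 else 0


def fgsm(w, b, x, y, eps):
    """White-box FGSM: move each feature by eps in the sign of the loss gradient.

    For logistic regression with binary cross-entropy, dL/dx = (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def l2(a, c):
    return math.sqrt(sum((ai - ci) ** 2 for ai, ci in zip(a, c)))


def decision_only_attack(classify, x, x_start, steps=500, noise=0.1, pull=0.05, seed=0):
    """Simplified black-box attack in the spirit of the Boundary Attack.

    Only calls classify(); never reads weights or gradients. Each proposal adds
    random noise, then contracts toward x, and is accepted only if it remains
    misclassified and is closer to x than the current adversarial point.
    """
    rng = random.Random(seed)
    original_label = classify(x)
    if classify(x_start) == original_label:
        raise ValueError("x_start must already be classified differently from x")
    adv = list(x_start)
    for _ in range(steps):
        d = l2(adv, x)
        sigma = noise * d / math.sqrt(len(x))  # noise scales with current distance
        cand = [a + rng.gauss(0.0, sigma) for a in adv]
        cand = [c + pull * (xi - c) for c, xi in zip(cand, x)]  # contract toward x
        if classify(cand) != original_label and l2(cand, x) < d:
            adv = cand
    return adv


# Usage with made-up weights for illustration.
w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1  # clean input, classified as 1
x_fgsm = fgsm(w, b, x, y, eps=0.8)


def classify(v):
    return predict_label(w, b, v)


x_start = [-1.0, 1.0]  # any input the model already assigns to class 0
x_black = decision_only_attack(classify, x, x_start)

print(predict_label(w, b, x), predict_label(w, b, x_fgsm), classify(x_black))  # 1 0 0
print(round(l2(x_start, x), 3), round(l2(x_black, x), 3))
```

On this toy model FGSM flips the label in one gradient-guided step, while the label-only attack makes hundreds of queries to shrink its perturbation. That query budget is the main price a black-box attacker pays for not seeing the model's internals.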

The researchers also analyzed how gender and language affect SER performance under adversarial conditions, and found only slight differences. English data appeared the most vulnerable to adversarial manipulation, while Italian data proved more robust. Male samples retained slightly higher accuracy under attack in some cases, but the gender differences were generally minor, suggesting that SER system design need not account significantly for gender variability.

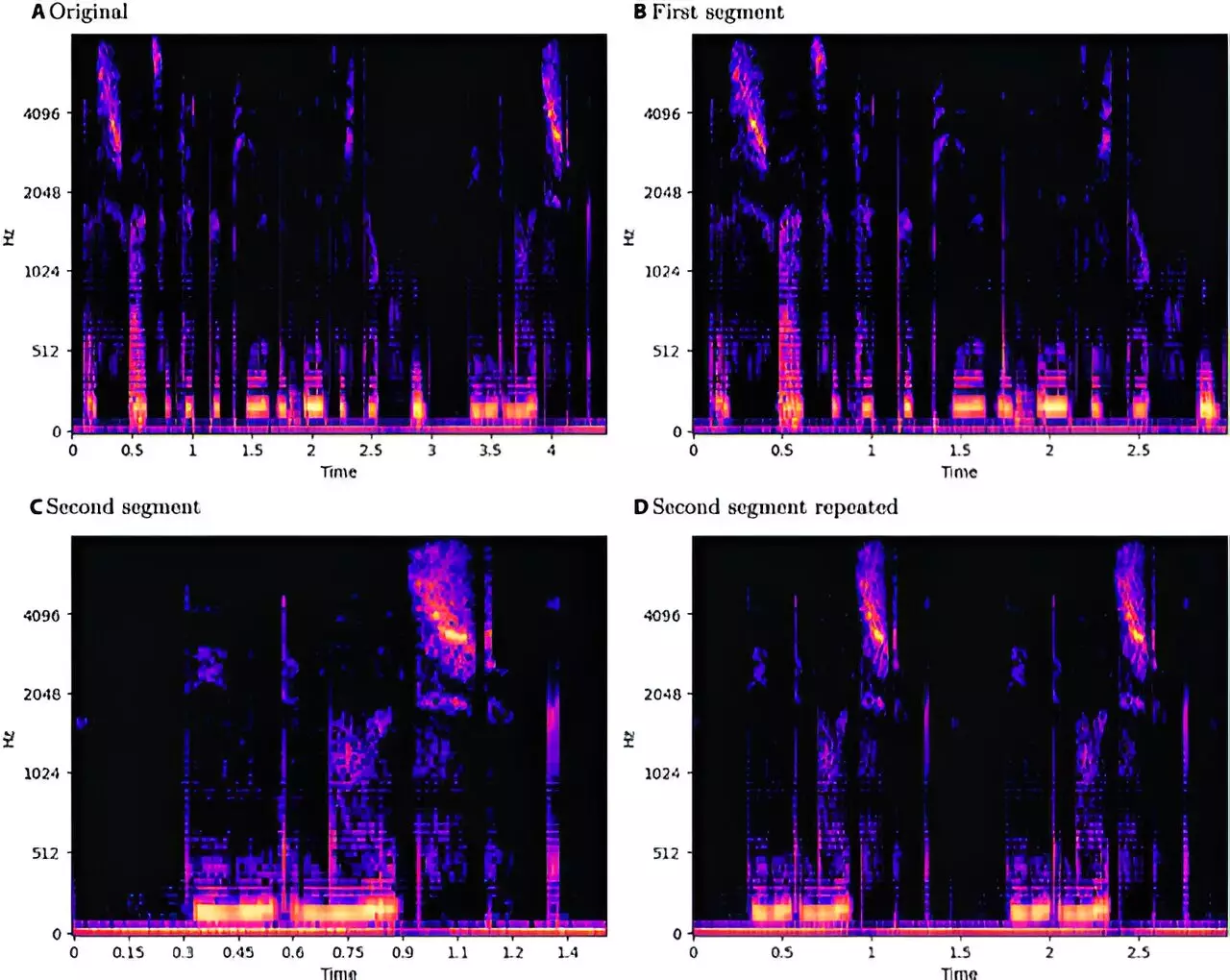

To keep their experiments consistent, the researchers built a rigorous data-processing pipeline tailored to the CNN-LSTM architecture used in the study. By standardizing samples across languages and applying augmentation techniques such as pitch shifting, they aimed to reduce variability arising from the data's inherent characteristics. Capping each sample at three seconds simplified feature extraction and made model training and evaluation more efficient.
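A minimal sketch of the two preprocessing steps named above, fixed-length clipping to three seconds and pitch-shift augmentation, follows. The 16 kHz sampling rate, the ±2-semitone shifts, and all function names are assumptions, not details from the study. The pitch shift is a deliberately naive resampling that changes duration along with pitch; a duration-preserving shifter such as `librosa.effects.pitch_shift` would be the more usual choice in practice.

```python
import math

SAMPLE_RATE = 16_000  # assumed sampling rate; not stated in the article
MAX_SECONDS = 3.0     # three-second cap described in the article


def fit_to_length(signal, sr=SAMPLE_RATE, max_seconds=MAX_SECONDS):
    """Truncate long clips and zero-pad short ones to exactly sr * max_seconds samples."""
    target = int(sr * max_seconds)
    clipped = list(signal[:target])
    return clipped + [0.0] * (target - len(clipped))


def naive_pitch_shift(signal, n_steps):
    """Crude pitch shift by resampling with linear interpolation.

    Shifts pitch by n_steps semitones but also changes duration, like playing
    a tape faster or slower. Positive n_steps raise pitch and shorten the clip.
    """
    if not signal:
        return []
    factor = 2.0 ** (n_steps / 12.0)
    out_len = max(1, int(len(signal) / factor))
    out = []
    for i in range(out_len):
        pos = i * factor
        j = int(pos)
        frac = pos - j
        nxt = signal[j + 1] if j + 1 < len(signal) else signal[j]
        out.append(signal[j] * (1.0 - frac) + nxt * frac)
    return out


def augment(signal, semitone_shifts=(-2, 2)):
    """Return the original clip plus pitch-shifted copies, all fit to the same length."""
    variants = [signal] + [naive_pitch_shift(signal, s) for s in semitone_shifts]
    return [fit_to_length(v) for v in variants]


# Usage: a 1-second 220 Hz tone becomes three 3-second training examples.
tone = [math.sin(2 * math.pi * 220 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
batch = augment(tone)
print(len(batch), [len(v) for v in batch])  # 3 [48000, 48000, 48000]
```

Fitting every variant to the same length after augmentation means the clips can be batched for a fixed-input model without any further handling.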

The researchers advocate transparency in documenting such findings. Although publicizing weaknesses could help adversaries, the authors argue that withholding this information could be even more damaging. Discussing SER vulnerabilities openly lets the research community work together to strengthen these systems against potential threats.

Speech emotion recognition offers exciting possibilities across many sectors, but the vulnerability of these systems to adversarial attacks cannot be overlooked. The University of Milan study highlights the urgent need for continued investigation into these models' weaknesses. Developing more resilient SER architectures, and discussing their vulnerabilities openly, will be crucial to deploying them safely and effectively in real-world applications. As artificial intelligence evolves, strategies for safeguarding these technologies must evolve with it.
