Artificial intelligence has become an essential component of modern technology, influencing everything from healthcare to finance. However, as these systems grow in complexity, so does the energy required to train and operate them. Recent findings from researchers at the École Polytechnique Fédérale de Lausanne (EPFL) point to a potential solution to one of the most pressing challenges in the field: energy consumption. The researchers introduce a programmable framework that significantly reduces the energy costs of optics-based AI systems, underscoring the need for sustainable alternatives to conventional electronic systems.

The research predicts that unless the current trajectory of AI server production is altered, the energy consumption of these systems could exceed that of an entire small nation by 2027. This alarming statistic raises crucial questions about the sustainability of AI as it continues to permeate various sectors. As demand grows, it becomes essential to explore not just the capabilities of AI but also the ramifications of its exponential energy appetite.

The Power of Photons: A Paradigm Shift

For decades, scientists have experimented with optical computing, on the premise that light can process information more efficiently than electronics can. Photons hold considerable potential because they travel faster than electrons and require significantly less energy. Yet optical systems have lagged behind their electronic rivals because of a key obstacle: achieving the complex nonlinear transformations that neural networks depend on. These transformations are crucial because they are what allow a network to analyze and classify data rather than merely rescale it.

The EPFL team’s research marks a paradigm shift. Their approach manipulates the spatial properties of a low-power laser beam so that it performs the nonlinear computations neural networks need while using a fraction of the energy typically required. The system is reportedly up to 1,000 times more power-efficient than state-of-the-art digital systems.

Innovative Methods for Nonlinear Computation

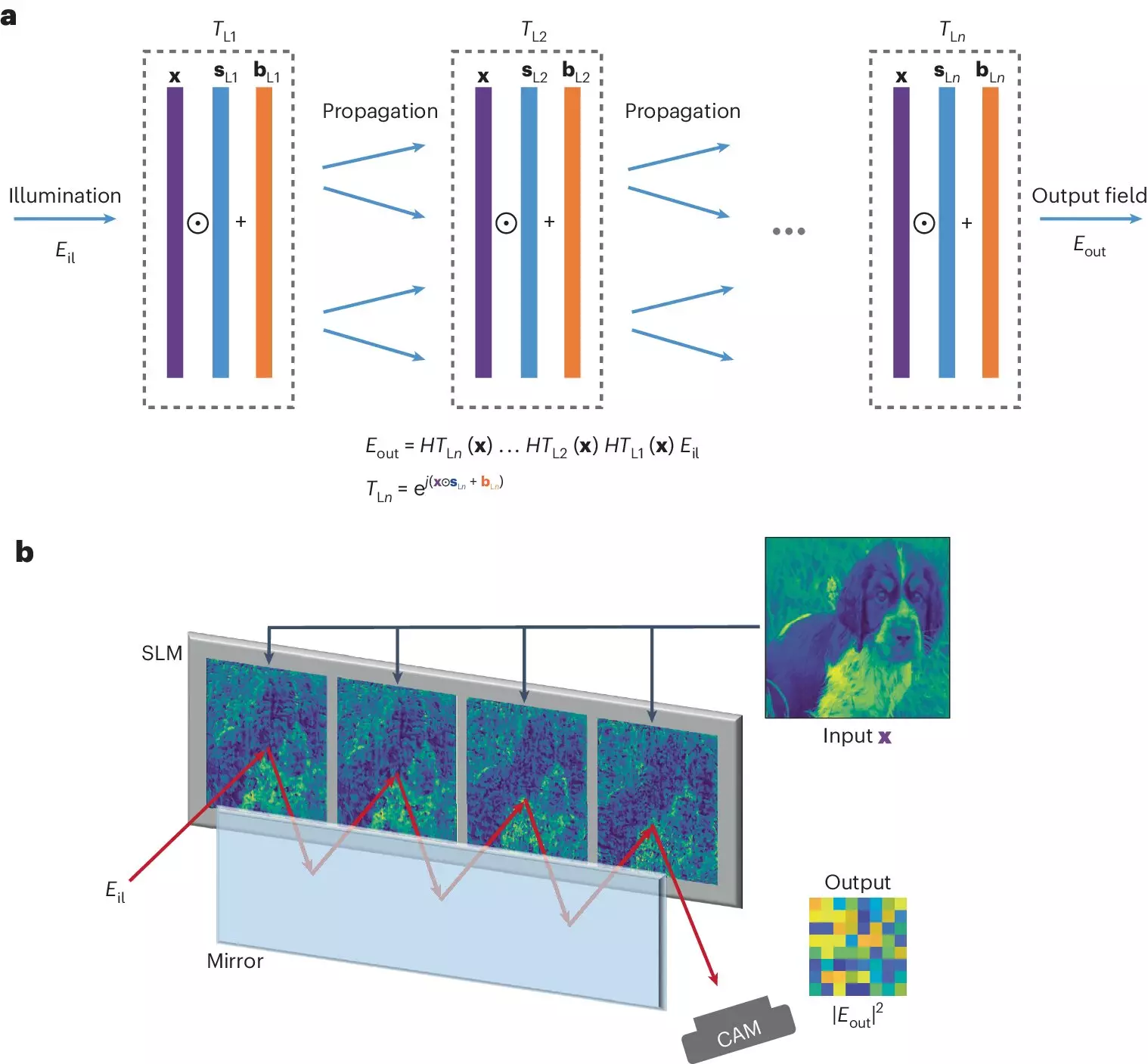

At the heart of this breakthrough lies a simple encoding scheme for data, specifically images. Instead of relying on high-powered lasers to make photons interact, the researchers devised a method in which the pixels of an image are spatially encoded within a low-power laser beam. The beam reflects on itself multiple times, so the encoding is applied repeatedly and the pixel values are multiplied together, essentially squaring the input data. This process achieves the nonlinearity that neural network applications need, and it does so at an energy cost for the operation that is reportedly eight orders of magnitude lower than that of traditional electronic systems.

The implications of this method extend beyond efficiency; they suggest new pathways toward future optical neural networks that operate at unprecedentedly low energy levels. By encoding the pixels more than twice, researchers can increase both the nonlinearity of the transformation and the precision of the computation. Such innovations could substantially expand computational capabilities and applications across various sectors, from medical diagnostics to automated driving systems.
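The effect of repeated encoding can be illustrated with a toy numerical model. This is only a sketch under simplifying assumptions, not the researchers' optical setup: it treats each pass as an element-wise multiplication of the beam by the pixel values, and the names `encode_passes` and `is_linear` are hypothetical, introduced here for illustration.

```python
def encode_passes(pixels, passes=2):
    """Toy model (assumption): each pass multiplies the beam by the pixel values."""
    field = [1.0] * len(pixels)  # uniform beam before any encoding
    for _ in range(passes):
        field = [f * p for f, p in zip(field, pixels)]
    return field


def is_linear(transform, a, b, tol=1e-9):
    """Check additivity: transform(a + b) should equal transform(a) + transform(b)."""
    summed = transform([x + y for x, y in zip(a, b)])
    separate = [x + y for x, y in zip(transform(a), transform(b))]
    return all(abs(s - t) <= tol for s, t in zip(summed, separate))


pixels = [0.0, 0.25, 0.5, 1.0]
squared = encode_passes(pixels, passes=2)  # pixel values squared
cubed = encode_passes(pixels, passes=3)    # pixel values cubed

a, b = [0.25, 0.5], [0.5, 0.25]
one_pass_linear = is_linear(lambda v: encode_passes(v, passes=1), a, b)
two_pass_linear = is_linear(lambda v: encode_passes(v, passes=2), a, b)

print(squared, cubed, one_pass_linear, two_pass_linear)
```

In this model a single pass is a linear map of the input, while two or more passes produce a polynomial of the input, which is the kind of nonlinearity a neural network layer requires. A physical system would also involve diffraction, detection, and noise, none of which is modeled here.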

Challenges Ahead: Bridging the Gap

Promising as the EPFL research is, several obstacles must be overcome before these optical systems can be fully realized. Scalability is the most significant. The goal is to create hybrid electronic-optical systems capable of further reducing energy consumption. However, the materials and hardware used in optical systems are fundamentally different from those of electronic systems, which creates unique engineering hurdles.

One major area the researchers are tackling is the development of a compiler. This tool will be essential for translating digital data into a format that optical systems can interpret. Bridging the gap between the two types of systems will take considerable engineering work, a necessary step toward making optical deep learning feasible on a large scale.

Shaping the Future of AI Technology

The EPFL team’s findings not only illuminate a path toward more energy-efficient artificial intelligence but also invite a broader conversation about the future of technology and sustainability. As the digital age progresses, it is imperative to adopt solutions that balance computational power with energy efficiency. Optical neural networks could offer the breakthrough needed to counteract the escalating energy demands that threaten to undermine the benefits AI provides.

Ultimately, this research represents more than just an incremental improvement; it heralds a potential revolution in how we approach AI and its intersection with energy consumption. In an era increasingly defined by ecological concerns, the challenge now lies in harnessing these innovations and ensuring they are brought to fruition effectively and sustainably.
