In artificial intelligence (AI), the speed and energy efficiency of the hardware that runs models such as ChatGPT play a crucial role in their success. These models are neural networks trained on vast amounts of data, and running them requires enormous numbers of arithmetic operations on their learned parameters. One of the most significant obstacles to making such systems faster is the von Neumann bottleneck. In a conventional computer the processor and the memory are separate units, so every parameter and every intermediate result must be shuttled between them over a link with limited bandwidth. This traffic, rather than the arithmetic itself, often limits speed and accounts for much of the energy consumed. As models grow and the volume of data keeps exploding, architectures that overcome this constraint are becoming essential to the continued progress of AI systems.
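To see why data movement rather than arithmetic is often the limit, one can use the roofline model, a standard back-of-the-envelope tool that is not part of the study discussed here. A matrix-vector multiplication y = Wx performs two floating-point operations (a multiply and an add) per weight but must read every weight from memory, so with 16-bit weights it does only about one operation per byte moved. The Python sketch below computes that ratio and the resulting attainable throughput for a hypothetical processor; the peak-compute and bandwidth figures are illustrative placeholders, not measurements of any real chip.

```python
def mvm_arithmetic_intensity(n_rows: int, n_cols: int, bytes_per_value: int = 2) -> float:
    """FLOPs per byte moved for y = W @ x when W must be streamed from memory."""
    flops = 2 * n_rows * n_cols  # one multiply and one add per weight
    # The weight matrix dominates the traffic; x and y are included for completeness.
    bytes_moved = bytes_per_value * (n_rows * n_cols + n_cols + n_rows)
    return flops / bytes_moved


def roofline_gflops(intensity: float, peak_gflops: float, bandwidth_gb_s: float) -> float:
    """Attainable throughput under the roofline model: the lower of the compute and memory roofs."""
    # GB/s multiplied by FLOP/byte gives GFLOP/s.
    return min(peak_gflops, bandwidth_gb_s * intensity)


if __name__ == "__main__":
    # Hypothetical processor: placeholder numbers for illustration only.
    PEAK_GFLOPS = 100_000.0   # 100 TFLOP/s of arithmetic
    BANDWIDTH_GB_S = 2_000.0  # 2 TB/s of memory bandwidth

    ai = mvm_arithmetic_intensity(4096, 4096)
    attainable = roofline_gflops(ai, PEAK_GFLOPS, BANDWIDTH_GB_S)
    print(f"intensity  = {ai:.3f} FLOP/byte")
    print(f"attainable = {attainable:.0f} GFLOP/s ({100 * attainable / PEAK_GFLOPS:.1f}% of peak)")
```

With these placeholder figures the processor reaches only about 2% of its arithmetic peak because it spends most of its time waiting for weights to arrive from memory. In-memory computing attacks exactly this gap by performing the multiplication where the weights already live.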

The Role of In-Memory Computing in Addressing Limitations

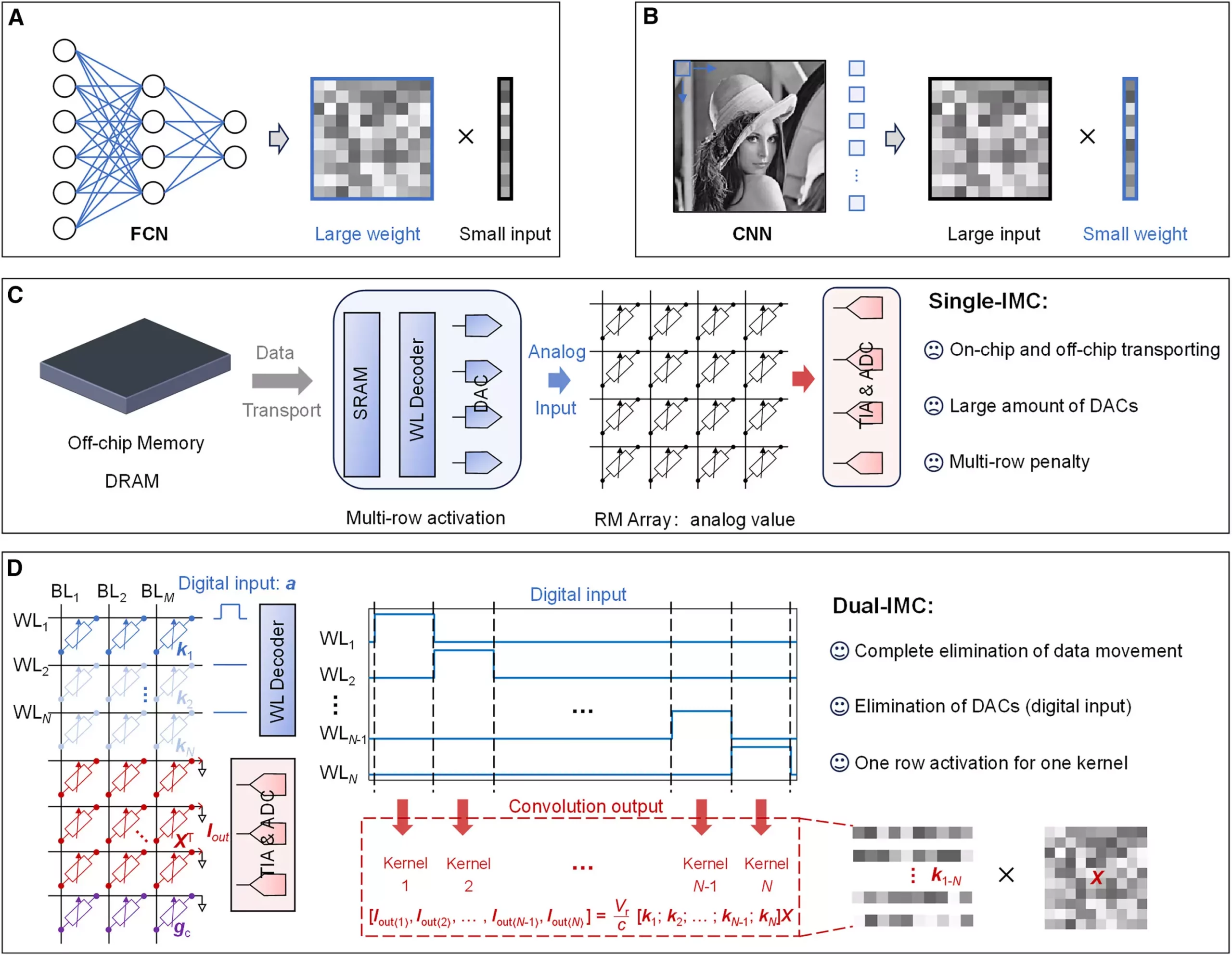

To tackle the von Neumann bottleneck, researchers are investigating new computing architectures. A recent study led by Professor Sun Zhong and his team at Peking University proposes one such approach, the dual-in-memory computing (dual-IMC) scheme. It aims to make machine learning both faster and more energy-efficient by changing how neural networks store and move the data they operate on. Neural networks, loosely inspired by the workings of the human brain, are at the heart of modern AI and are used to make sense of complex inputs such as images and text. Their core workload is matrix-vector multiplication, in which a vector of inputs is multiplied by a matrix of learned weights, and dual-IMC rethinks where both of those operands are stored and how they are combined.

Until now, the standard in-memory answer to the von Neumann bottleneck has been single in-memory computing (single-IMC). In this configuration, the neural network's weights are stored in a memory array where the multiplication itself takes place, while the inputs are held in separate off-chip and on-chip memory and streamed into the array for each computation. Although single-IMC avoids moving the weights, it still spends significant time and power shuttling inputs between off-chip and on-chip resources. Because the array computes with analog signals, each digital input must also pass through a digital-to-analog converter (DAC), which adds to the circuit footprint and energy expenditure.
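The single-IMC idea can be sketched numerically. In a resistive crossbar, each weight is stored as a conductance G; applying input voltages V to the rows produces a current G·V in every cell (Ohm's law), and those currents add up along each column (Kirchhoff's current law), so the column currents form a matrix-vector product in one analog step. The Python/NumPy sketch below models that ideal behavior. It maps signed weights onto a pair of non-negative conductances (a common differential scheme, assumed here rather than taken from the study), assumes non-negative inputs such as post-ReLU activations, and passes the inputs through a simple b-bit DAC model. Device noise, wire resistance, and the analog-to-digital converters that read the outputs are not modeled, and the conductance ceiling `g_max` is a hypothetical value.

```python
import numpy as np


def dac_quantize(x: np.ndarray, bits: int, v_max: float) -> np.ndarray:
    """Model an ideal b-bit DAC: clip to [0, v_max] and snap to 2**bits uniform levels."""
    steps = 2 ** bits - 1  # number of steps between 0 and v_max
    clipped = np.clip(x, 0.0, v_max)
    return np.round(clipped / v_max * steps) / steps * v_max


def weights_to_conductances(w: np.ndarray, g_max: float):
    """Map signed weights to two non-negative conductance arrays with w proportional to G+ - G-."""
    scale = g_max / np.max(np.abs(w))  # the largest |weight| maps to g_max
    g_pos = np.clip(w, 0.0, None) * scale
    g_neg = np.clip(-w, 0.0, None) * scale
    return g_pos, g_neg, scale


def crossbar_mvm(g_pos: np.ndarray, g_neg: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Ideal crossbar read: column current j is sum_i G[i, j] * V[i]; subtract the negative array."""
    return v @ g_pos - v @ g_neg


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(size=(64, 16))       # 64 inputs (rows) x 16 outputs (columns)
    x = rng.uniform(0.0, 1.0, size=64)  # non-negative inputs, e.g. post-ReLU activations
    g_pos, g_neg, scale = weights_to_conductances(w, g_max=1e-4)  # hypothetical 100 uS ceiling
    for bits in (2, 4, 8):
        v = dac_quantize(x, bits=bits, v_max=1.0)
        y_analog = crossbar_mvm(g_pos, g_neg, v) / scale  # convert currents back to weight units
        err = np.max(np.abs(y_analog - x @ w))
        print(f"{bits}-bit DAC: max error {err:.4f}")
```

In this idealized model all of the error comes from the DAC. Raising its resolution shrinks the error, but in real circuits a higher-resolution converter typically costs more area and energy per conversion, which is the overhead the article attributes to DACs.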

The dual-IMC scheme removes these hurdles by storing both the weights and the inputs of the neural network in the same memory array. Because every operation is then carried out fully in memory, dual-IMC eliminates the need for off-chip dynamic random-access memory (DRAM) and on-chip static random-access memory (SRAM) to hold and stage the inputs. The result is a streamlined computation that is not only faster but also considerably more energy-efficient.

Professor Sun's team tested the dual-IMC scheme on resistive random-access memory (RRAM) devices, focusing on applications in signal recovery and image processing. Their results show several advantages over the conventional single-IMC approach.

Most importantly, because dual-IMC computes fully in memory, it sharply reduces the time and energy spent on data movement: inputs no longer have to travel between separate memories before every operation, so applications built on dual-IMC can run markedly more efficiently. In addition, removing the DACs lowers production costs and frees circuit area, and it improves performance by cutting computing latency and power consumption.
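The savings described above are essentially an accounting argument, so they can be made concrete with a toy operation count. The Python sketch below tallies, per matrix-vector multiplication, the DRAM bytes, SRAM traffic, DAC conversions, array writes, and in-array operations each scheme needs. It rests on simplifying assumptions that are not taken from the study: single-IMC inputs are read once from DRAM, written to and read back from an SRAM buffer, and converted by one DAC each; dual-IMC inputs are written into the memory array once and then used in place. The hypothetical `energy` helper takes per-operation costs from the caller rather than supplying any, since real values depend on the device and fabrication process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OpCounts:
    """Operations needed for one matrix-vector multiplication (MVM) under a toy model."""
    dram_bytes: int       # input bytes fetched from off-chip DRAM
    sram_bytes: int       # input bytes written to plus read from on-chip SRAM buffers
    dac_conversions: int  # digital-to-analog conversions of input values
    array_writes: int     # input values written into memory cells of the array
    array_ops: int        # analog MVMs performed inside the array


def single_imc_counts(n_inputs: int, bytes_per_input: int) -> OpCounts:
    """Assumed single-IMC flow: weights stay in the array; inputs go DRAM -> SRAM -> DAC -> array."""
    moved = n_inputs * bytes_per_input
    return OpCounts(
        dram_bytes=moved,
        sram_bytes=2 * moved,  # one write into the buffer, one read out of it
        dac_conversions=n_inputs,
        array_writes=0,
        array_ops=1,
    )


def dual_imc_counts(n_inputs: int) -> OpCounts:
    """Assumed dual-IMC flow: inputs are stored in the same array as the weights and used in place."""
    return OpCounts(
        dram_bytes=0,
        sram_bytes=0,
        dac_conversions=0,
        array_writes=n_inputs,
        array_ops=1,
    )


def energy(c: OpCounts, e_dram: float, e_sram: float, e_dac: float,
           e_write: float, e_array: float) -> float:
    """Weight each count by a caller-supplied per-operation energy (all in the same unit)."""
    return (c.dram_bytes * e_dram + c.sram_bytes * e_sram + c.dac_conversions * e_dac
            + c.array_writes * e_write + c.array_ops * e_array)


if __name__ == "__main__":
    single = single_imc_counts(n_inputs=256, bytes_per_input=1)
    dual = dual_imc_counts(n_inputs=256)
    print("single-IMC:", single)
    print("dual-IMC:  ", dual)
```

The model deliberately charges dual-IMC for writing its inputs into the array so that the comparison is not one-sided; plugging in measured per-operation costs for a specific memory technology would show how large the savings are in practice.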

The Future of Computing Architecture and AI

As the digital landscape evolves, the demands for advanced data-processing capabilities continue to surge. The breakthroughs achieved through the dual-IMC scheme open new pathways for enhancing computing architectures and artificial intelligence systems alike. By resolving some of the fundamental limitations posed by traditional computing paradigms, this innovative approach holds the potential to transform how we approach machine learning, from training deep neural networks to deploying sophisticated AI applications.

As researchers like Professor Sun Zhong explore the frontiers of artificial intelligence and computing architecture, their discoveries underscore the importance of innovation in a rapidly changing technological landscape. The dual-IMC scheme may well mark a pivotal moment in the quest to enhance AI capabilities, driving forward a new era of efficiency and performance in data processing.