Human emotions are among the most intricate facets of our lives, full of subtleties that can elude even the most perceptive observers. Any attempt to decipher an emotional state exposes the many variables that shape human experience, and the difficulty grows considerably when we try to impart this understanding to artificial intelligence (AI). Even with today’s technological advances, emotions resist being sorted into fixed categories or confined to a rigid structure. Recognizing this complexity is a prerequisite for building systems that can analyze human emotional states accurately.

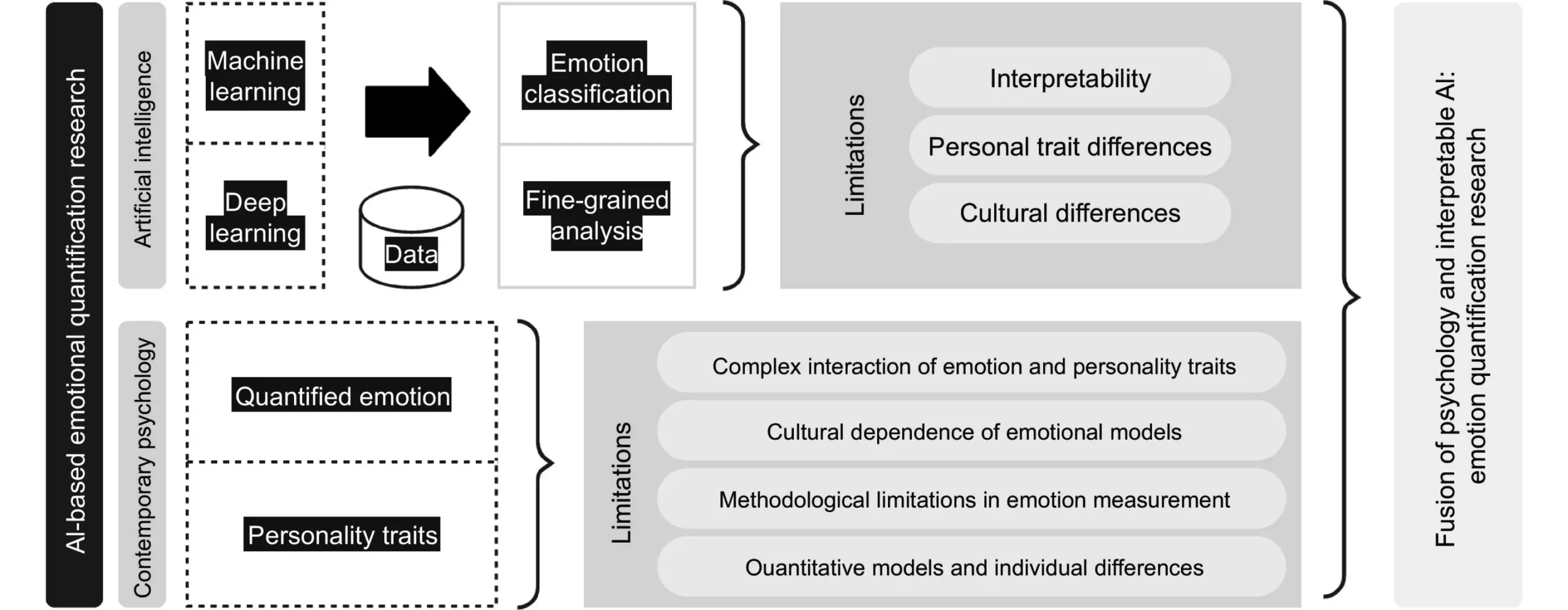

In the field of emotion quantification, considerable progress has been made in training AI systems to observe and interpret emotional signals. By combining long-established psychological techniques with cutting-edge technology, researchers aim to build a more effective framework for emotion recognition. Integrating traditional approaches with AI’s capabilities holds particular promise in sectors such as healthcare, education, and customer service, where understanding emotional states can improve patient care, learning effectiveness, and customer satisfaction.

Feng Liu, a key figure in this research area, highlights the potential of these technologies to transform a range of fields. An AI that can discern emotional cues and respond accordingly would mark a paradigm shift in human-computer interaction. By synthesizing data from multiple emotional indicators, including facial expressions, gestures, and physiological reactions, AI systems could build a richer picture of a user’s emotional state and support more personalized interactions.

Innovative Approaches to Emotion Quantification

One noteworthy area of study is multi-modal emotion recognition, which combines data from diverse modalities, such as visual, auditory, and tactile signals, so that an AI system can form a more complete picture of a user’s emotional state. For instance, electroencephalogram (EEG) recordings can reveal neural responses, while eye-movement tracking captures visual attention cues; together they support a more nuanced reading of emotion.
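One common way to combine modalities is late fusion: each modality gets its own classifier, and the classifiers’ output probabilities are merged afterward. The sketch below illustrates that idea under stated assumptions; it is not the method used by Liu or by any particular system. The four-label emotion set, the modality names (`eeg`, `eye`, `face`), the fusion weights, and the classifier outputs are all hypothetical, and a weighted average is only one of several possible fusion rules.

```python
from typing import Dict, List

# Hypothetical label set shared by every modality-specific classifier.
EMOTIONS: List[str] = ["neutral", "happy", "sad", "angry"]


def late_fusion(
    modality_probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Combine per-modality class probabilities with a normalized weighted average."""
    # Normalize weights over the modalities actually present, so the result
    # is still a probability distribution if one sensor is missing.
    total_weight = sum(weights[m] for m in modality_probs)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")

    fused = [0.0] * len(EMOTIONS)
    for modality, probs in modality_probs.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} probabilities")
        w = weights[modality] / total_weight
        for i, p in enumerate(probs):
            fused[i] += w * p
    return dict(zip(EMOTIONS, fused))


if __name__ == "__main__":
    # Made-up outputs from three hypothetical classifiers.
    outputs = {
        "eeg": [0.1, 0.6, 0.2, 0.1],
        "eye": [0.3, 0.4, 0.2, 0.1],
        "face": [0.1, 0.7, 0.1, 0.1],
    }
    fused = late_fusion(outputs, {"eeg": 0.5, "eye": 0.2, "face": 0.3})
    print(max(fused, key=fused.get))  # prints: happy
```

Late fusion keeps each modality’s model independent, which generally makes it easy to drop a sensor that is unavailable; early fusion, which concatenates features before a single model, can capture cross-modal interactions but tends to be less tolerant of missing inputs.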

Physiological indicators such as heart rate variability and galvanic skin response can also serve as inputs for quantifying emotional arousal. These complementary measurements give the AI richer training signals, allowing it to treat emotions as a spectrum rather than as a set of isolated categories. Fostering interdisciplinary collaboration among AI researchers, psychologists, and experts in related fields is essential to advancing this domain; Liu points out that such cooperation is critical to fully harnessing the capabilities of emotion quantification.
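To make "quantifying" concrete for heart rate variability, a widely used time-domain HRV statistic is RMSSD, the root mean square of successive differences between consecutive RR (beat-to-beat) intervals: RMSSD = sqrt( (1 / (N − 1)) · Σ (RR_{i+1} − RR_i)² ) for N intervals. The sketch below computes it from intervals in milliseconds. The sample intervals are made up, and how an RMSSD value is turned into an arousal score is an assumption left to whatever model consumes it.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each interval and the one that follows it.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    # Mean of the squared differences, then the square root.
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    rr = [800, 810, 790, 805, 795]  # made-up RR intervals in milliseconds
    print(round(rmssd(rr), 2))  # prints: 14.36
```

In practice RMSSD is usually computed over fixed-length windows rather than a whole session, so that changes in variability can be tracked over time and aligned with other signals.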

As emotion recognition technology spreads, ethical considerations become paramount. Concerns about data privacy and transparency must be addressed, particularly given the sensitive nature of mental health and emotional well-being. Organizations that deploy emotion recognition AI must follow robust data-handling protocols to protect users’ personal information and must be transparent about how that information is collected and used. Such transparency not only safeguards individual rights but also strengthens public trust in these systems.

Additionally, emotion recognition technology must adapt to cultural variations in expressing and interpreting emotions. Different societies may exhibit distinct emotional expressions and narratives that an AI system needs to learn and respect. Ignoring these nuances risks undermining the technology’s reliability and relevance across diverse populations.

A Path Forward

As researchers integrate AI more deeply into emotion quantification, the range of potential applications continues to grow. From improving mental health monitoring to creating adaptive learning environments in education, the benefits of reliably interpreting human emotions through technology could be significant. If comprehensive and culturally sensitive frameworks are established, the interplay of AI and emotional intelligence could transform how society understands and responds to emotional complexity.

The successful evolution of emotion recognition technology hinges on a delicate balance between innovative research, ethical responsibility, and cultural sensitivity. As we move closer to a future in which AI can more accurately interpret and respond to human emotions, these systems must be deployed with care, foresight, and a strong respect for the multifaceted nature of human emotion.
