Artificial intelligence and machine learning have advanced significantly in recent years, yet scientists still struggle to build and scale brain-like systems that perform complex tasks efficiently. A major tradeoff has been the time and power required to train computers on tasks such as complex language processing and visual recognition. In this article, we explore a new analog system developed by researchers at the University of Pennsylvania that aims to address these challenges and could change how machine learning is done.

Artificial neural networks have shown promise in learning complex tasks, but training them often takes a significant amount of time and power. Errors made during training can also compound rapidly, causing further inefficiency. To get around these limitations, researchers at the University of Pennsylvania previously designed an electrical network that was more scalable and could learn linear tasks effectively. That system, however, could not handle more complex tasks involving nonlinear relationships.
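To see why a purely linear learner runs into trouble with nonlinear relationships, consider the classic "exclusive or" (XOR) task, whose output is 1 when exactly one of two binary inputs is 1 and 0 otherwise. Assuming, for illustration, that a linear network's output is an affine function $f(x_1, x_2) = w_1 x_1 + w_2 x_2 + b$ of its two inputs, a short calculation shows that no choice of $w_1$, $w_2$, $b$ can fit it:

$$
\begin{aligned}
f(0,0) + f(1,1) &= b + (w_1 + w_2 + b) = w_1 + w_2 + 2b,\\
f(0,1) + f(1,0) &= (w_2 + b) + (w_1 + b) = w_1 + w_2 + 2b.
\end{aligned}
$$

The two sums are always equal, but XOR requires $f(0,0) + f(1,1) = 0 + 0 = 0$ and $f(0,1) + f(1,0) = 1 + 1 = 2$. Fitting XOR exactly therefore requires some form of nonlinearity.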

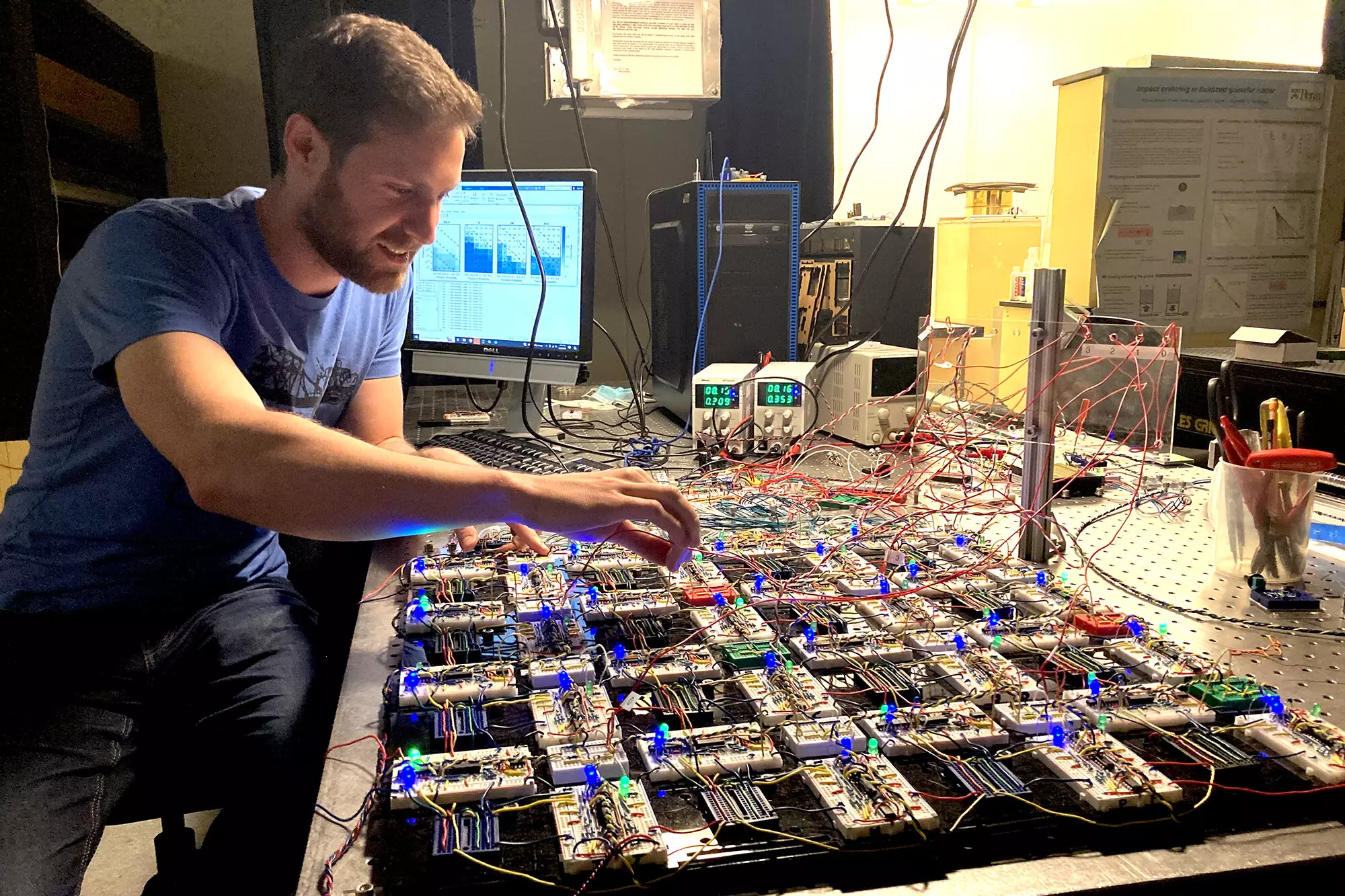

The researchers at the University of Pennsylvania have now developed an analog system called a contrastive local learning network. It is fast, low-power, and scalable, and it can learn complex tasks such as “exclusive or” (XOR) relationships and nonlinear regression. Unlike the components of traditional neural networks, each component of this system evolves according to local rules, without knowledge of the larger network's structure, much as neurons in the human brain do.
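The article does not describe the circuit in detail, so the following is only a toy illustration of what a "local rule" can look like, not the Penn team's implementation. It assumes a simple linear network of adjustable resistors (conductances `k`) and a contrastive rule of the "coupled learning" type from the physical-learning literature: the network settles once freely and once with its output nudged a fraction `eta` toward the target, and each edge then updates its own conductance using only the voltage drops across itself in those two states. The node layout, `alpha`, `eta`, `k_min`, and all function names are hypothetical, and because this network is linear it could not learn XOR.

```python
"""Toy contrastive ("coupled") learning on a linear resistor network.

Hypothetical sketch: node 0 is held at 1 V (source), node 1 at 0 V (ground),
and node 4 is the output. Names and parameters are illustrative only.
"""

# Hypothetical network: each (a, b) pair is one adjustable resistor.
EDGES = [(0, 2), (0, 3), (2, 3), (2, 4), (3, 4), (4, 1), (2, 1), (3, 1)]
SOURCE, GROUND, OUTPUT = 0, 1, 4
NUM_NODES = 5


def relax(k, fixed, tol=1e-12, max_sweeps=10_000):
    """Find node voltages by Gauss-Seidel relaxation.

    Each free node repeatedly moves to the conductance-weighted average of its
    neighbours, which is Kirchhoff's current law at equilibrium.
    `fixed` maps node index -> imposed voltage.
    """
    v = [fixed.get(n, 0.0) for n in range(NUM_NODES)]
    neighbours = {n: [] for n in range(NUM_NODES)}
    for (a, b), kj in zip(EDGES, k):
        neighbours[a].append((b, kj))
        neighbours[b].append((a, kj))
    free = [n for n in range(NUM_NODES) if n not in fixed]
    for _ in range(max_sweeps):
        largest_change = 0.0
        for n in free:
            total = sum(kj for _, kj in neighbours[n])
            new = sum(kj * v[m] for m, kj in neighbours[n]) / total
            largest_change = max(largest_change, abs(new - v[n]))
            v[n] = new
        if largest_change < tol:
            break
    return v


def output_voltage(k):
    """Output voltage of the free (unclamped) network."""
    return relax(k, {SOURCE: 1.0, GROUND: 0.0})[OUTPUT]


def train_step(k, target, alpha=0.5, eta=0.1, k_min=1e-3):
    """One contrastive update in which every edge uses only its own voltage drops."""
    free = relax(k, {SOURCE: 1.0, GROUND: 0.0})
    # Clamped state: hold the output a fraction eta of the way toward the target.
    nudged = free[OUTPUT] + eta * (target - free[OUTPUT])
    clamped = relax(k, {SOURCE: 1.0, GROUND: 0.0, OUTPUT: nudged})
    new_k = []
    for (a, b), kj in zip(EDGES, k):
        drop_free = free[a] - free[b]
        drop_clamped = clamped[a] - clamped[b]
        # Local rule: compare this edge's squared voltage drop in the two states.
        kj += (alpha / (2 * eta)) * (drop_free**2 - drop_clamped**2)
        new_k.append(max(kj, k_min))  # conductances must stay positive
    return new_k


if __name__ == "__main__":
    k = [1.0] * len(EDGES)
    target = 0.5
    print(f"before: {output_voltage(k):.4f}")
    for _ in range(500):
        k = train_step(k, target)
    print(f"after:  {output_voltage(k):.4f} (target {target})")
```

Running the script should print a starting output of 0.2857 and then a value close to the 0.5 target. The design choice worth noticing is that no edge reads any other edge's conductance or any global error gradient; the network-wide information enters only through the two settled voltage states, which a physical circuit would reach on its own.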

One of the key features of this analog learning network is its tolerance to errors and its robustness across different configurations. The system can adapt to variations and is not tied to a predefined network structure, which makes it easier to scale and to apply in many fields. Its analog nature also makes the learning mechanism more interpretable, so it is easier to understand why the network makes a given decision.

The potential applications of this analog learning network are broad, ranging from interfacing with devices whose data must be processed, such as cameras and microphones, to studying emergent learning in biological systems. The researchers are now working on scaling up the design and exploring questions about memory storage, the effects of noise, network architecture, and nonlinearity. Their ultimate goal is to understand how learning systems change as they grow larger, a question that also arises when trying to understand the complexity of the human brain.

The contrastive local learning network represents a significant advance in machine learning. This analog system could change the way complex tasks are learned and performed, with implications for a wide range of applications. As researchers continue to explore its capabilities and scale up its design, it may pave the way for a new generation of intelligent systems that are not only efficient and scalable but also interpretable and adaptable.
