On August 1, the European Union's (EU) AI Act entered into force. This groundbreaking law regulates artificial intelligence and acknowledges the risks the technology can pose, particularly in sensitive domains such as healthcare and finance. A research team led by computer scientist Holger Hermanns of Saarland University and legal scholar Anne Lauber-Rönsberg of Dresden University of Technology has examined what the act means for software developers who build AI-based applications.

The results of their analysis, published on the arXiv preprint server, are a practical resource for programmers trying to understand the implications of this 144-page regulation. “This act signifies a crucial step in recognizing the dangers that AI could pose, particularly in scenarios where it intersects with critical human interests,” Hermanns remarked. For programmers, however, the pressing question is how the new rules will affect their day-to-day work.

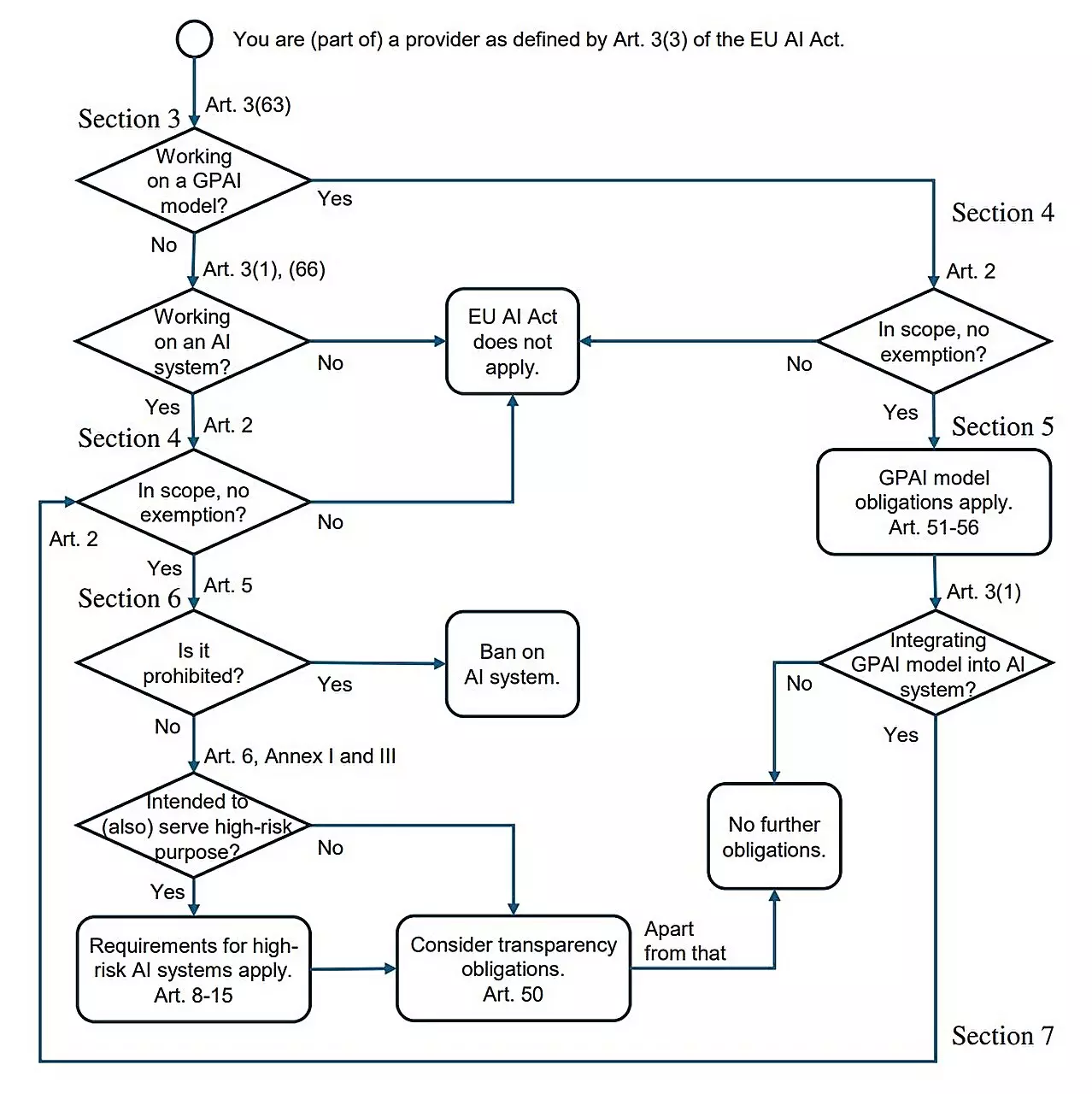

According to Hermanns, one major problem is that many programmers are unsure what the AI Act means for them in practice, and few have the time or resources to read the entire regulation. To help, Hermanns, doctoral researcher Sarah Sterz, postdoctoral researcher Hanwei Zhang, and Lauber-Rönsberg wrote a report titled “AI Act for the Working Programmer.”

Sterz sums up the report's central finding: for most developers and users, the AI Act is unlikely to require drastic changes to existing practices. “In essence, only those involved in developing high-risk AI systems will need to adapt their approaches significantly due to the new regulations,” she notes.

The core of the AI Act is its distinction between high-risk and low-risk AI systems. High-risk systems, such as those used to screen job applicants or to support medical diagnoses, are subject to strict requirements intended to protect people from discrimination or harm. Hermanns explained that a system designed to scan job applications, and potentially filter out qualified candidates before any human sees them, must meet these requirements as soon as it is put into service or placed on the market.

By contrast, lower-risk applications, such as AI in video games or spam filters, face far less regulatory scrutiny, and their developers can largely carry on as before. For programmers judging whether the act is relevant to a given project, this distinction is the first thing to check.

For systems that do fall into the high-risk category, the AI Act sets out a range of requirements. Developers must ensure that training data are of adequate quality and sufficiently representative, so that the system does not learn biases that lead to discriminatory outcomes. These systems must also keep logs detailed enough to reconstruct what happened, much as a flight recorder does for an aircraft.
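The act does not prescribe a particular log format. Purely as an illustration, the sketch below assumes Python and a hypothetical `EventLog` class (not part of any library, nor of the researchers' report) and shows one common way to make an event log tamper-evident: each entry stores a SHA-256 hash of the previous entry, so that editing an entry, or removing one from the middle, can be detected later. Whether such a design satisfies the act's record-keeping requirements in a given case is a legal question this sketch does not answer.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class EventLog:
    """Hypothetical append-only event log with hash chaining.

    verify() detects an edited entry or a gap in the chain; it does not
    detect truncation of the newest entries or a fully recomputed chain.
    """

    def __init__(self):
        self.entries = []

    def record(self, event, **details):
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "details": details,
            "prev_hash": prev_hash,
        }
        # Hash a canonical JSON encoding of the entry (without its own hash).
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check that each entry links to its predecessor."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = EventLog()
    log.record("input_received", application_id="A-17")
    log.record("model_output", application_id="A-17", score=0.42)
    print(log.verify())  # True
    # Tampering with an earlier entry breaks its hash.
    log.entries[0]["details"]["application_id"] = "A-99"
    print(log.verify())  # False
```

Hash chaining is only one possible choice; in practice such logs would typically also be written to storage that the system itself cannot rewrite.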

Sterz emphasized that the system's properties must also be documented thoroughly, much like a conventional user manual. Such documentation allows users to oversee the AI system properly, detect errors early, and correct them.

Overall, Hermanns and his colleagues view the AI Act with cautious optimism. Although it places significant restrictions on high-risk systems, the everyday work of most developers will remain largely unchanged. Practices that are already unlawful, such as using facial recognition for emotional profiling, remain prohibited under the new rules. Hermanns believes that this balance between regulation and innovation will help Europe compete globally in AI.

The researchers also stress that the AI Act does not restrict research and development, whether in the public or the private sector. Programmers can therefore treat the new legal framework as a structure that could increase trust in AI systems and, in turn, their public acceptance.

The EU’s AI Act marks a milestone in efforts to promote ethical AI while mitigating its risks. By understanding its implications, programmers can adapt to the new rules within a framework that encourages responsible innovation.
